Artificial Intelligence, Machine Learning, and Deep Learning are closely related concepts within the field of computer science, but they are not interchangeable.

What is Machine Learning?

Instead of relying on explicit instructions, machine learning algorithms learn patterns and relationships directly from the data they are exposed to.

These algorithms analyze large amounts of data, identify patterns, and make informed predictions or take actions based on those patterns.

Basically, we can (for now) categorize Machine Learning into:

• Supervised Learning

• Unsupervised Learning

• Others (Reinforcement Learning, Recommender Systems, etc.)

Supervised Learning

Unsupervised Learning

Regression

Regression is a statistical technique used in data analysis and machine learning to model the relationship between a dependent variable and one or more independent variables. Its primary goal is to understand and quantify how changes in the independent variables are associated with changes in the dependent variable.

The key components of regression analysis include:

Dependent Variable (Response Variable): This is the variable that you want to predict or explain. It is the outcome or target variable that you are trying to understand.

Independent Variable(s) (Predictor Variable(s)): These are the variables that you believe may have an influence on the dependent variable. In simple linear regression, there's one independent variable, while in multiple linear regression, there are two or more.

Regression Equation: The relationship between the independent variable(s) and the dependent variable is expressed through a mathematical equation. The equation describes a straight line (in simple linear regression) or a plane or hyperplane (in multiple linear regression) and can be written as

Y = β0 + β1X1 + β2X2 + ... + ε

where:

- Y is the dependent variable.

- X1, X2, ... are the independent variables.

- β0 is the intercept (the value of Y when all independent variables are 0).

- β1, β2, ... are the coefficients representing the effect of each independent variable.

- ε is the error term, representing the unexplained variability in the data.

The goal in regression analysis is to estimate the coefficients (β0, β1, β2, ...) that best fit the data and to make predictions or inferences based on the model.
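As a rough illustration of estimating the coefficients, here is a minimal sketch for the simplest case of one independent variable, using the closed-form ordinary least squares solution. The function name `fit_simple_linear_regression` and the sample data are assumptions made for this example; they are not part of the original material.

```python
def fit_simple_linear_regression(xs, ys):
    """Estimate the intercept (b0) and slope (b1) by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: co-variation of x and y divided by the variation of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b1 = sxy / sxx
    # Intercept: the fitted line passes through the point of means.
    b0 = mean_y - b1 * mean_x
    return b0, b1


# Hypothetical data generated from Y = 1 + 2*X with no error term.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
b0, b1 = fit_simple_linear_regression(xs, ys)
print(f"intercept = {b0}, slope = {b1}")
```

With real data the error term ε is not zero, so the estimated coefficients only approximate the underlying relationship. Multiple linear regression needs a matrix-based solver, which this sketch does not cover.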

Example:

Model Representation

How do we represent h?

h represents the hypothesis in our context. Given x as the size of a house, our objective is to calculate y, which corresponds to the price of the house. With a set of available examples, we aim to derive an equation that can generalize this relationship. Given that we are dealing with only one independent variable, linear regression is a suitable method for this purpose.

We know from the slope-intercept form that we can represent a line as:

y = mx + b

- `y` represents the dependent variable (usually the output or response variable).

- `x` represents the independent variable (usually the input or predictor variable).

- `m` represents the slope of the line.

- `b` represents the y-intercept, which is the value of `y` when `x` is zero.

Therefore, renaming the intercept `b` to θ0 and the slope `m` to θ1, we can write h as:

hθ(x) = θ0 + θ1x
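The hypothesis can be sketched as a small function, assuming the linear form h(x) = θ0 + θ1·x, where θ0 plays the role of the intercept `b` and θ1 the role of the slope `m`. The parameter values and the house size below are hypothetical, chosen only to show how a prediction is computed.

```python
def hypothesis(theta0, theta1, x):
    """Predict y for input x using the line theta0 + theta1 * x."""
    return theta0 + theta1 * x


# Hypothetical parameters: a base price of 50 and 0.5 per unit of size.
theta0 = 50.0
theta1 = 0.5
predicted_price = hypothesis(theta0, theta1, 1000.0)
print(predicted_price)
```

Different choices of θ0 and θ1 give different lines, and therefore different predictions for the same house size.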

Cost Function

Now that we have both a training set and a hypothesis, a natural question emerges: how should we select the θi's?

Choose θ0 and θ1 so that hθ(x) is close to y for our training examples (x, y).

Here is the formal definition of Cost Function:

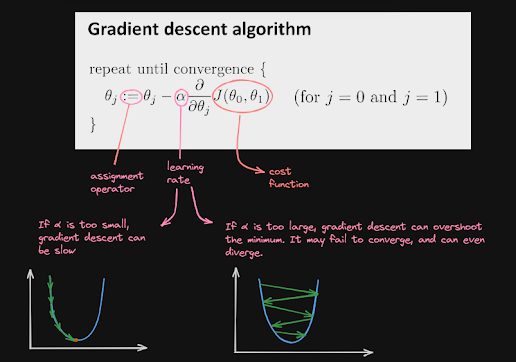

A cost function, also known as a loss function or objective function, is a critical concept in various fields, including machine learning, optimization, and statistics. It is a mathematical function that quantifies the difference between the predicted or estimated values (often produced by a model) and the actual values (ground truth or target values) in a dataset. The primary purpose of a cost function is to measure the performance or accuracy of a model or algorithm.

In the context of machine learning and optimization, the cost function plays a central role in the training and evaluation of models. The general idea is to find model parameters or settings that minimize the cost function, indicating that the model's predictions are as close as possible to the actual values.

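In linear regression, the Mean Squared Error (MSE) is a common cost function. It averages the squared differences between the predicted values and the actual values over the training examples.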
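A minimal sketch of an MSE cost for a one-variable linear hypothesis follows. The function name `mse_cost` and the toy training set are assumptions for illustration. This version divides by the number of examples m; some presentations divide by 2m instead, which rescales the cost but does not change which parameters minimize it.

```python
def mse_cost(theta0, theta1, xs, ys):
    """Mean squared error of the line theta0 + theta1 * x on the data."""
    m = len(xs)
    squared_errors = (
        (theta0 + theta1 * x - y) ** 2 for x, y in zip(xs, ys)
    )
    return sum(squared_errors) / m


# Hypothetical training set that lies exactly on y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

cost_good = mse_cost(0.0, 2.0, xs, ys)  # parameters that match the data
cost_poor = mse_cost(0.0, 1.0, xs, ys)  # slope that is too small
print(cost_good, cost_poor)
```

The parameters that match the data give a lower cost than the poorly chosen ones, which is the property that makes the cost function useful for selecting θ0 and θ1.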

